Quantum Computing Breakthrough: Error Correction by Late 2025?

The potential for achieving robust quantum error correction by late 2025 is a topic of intense research, with scientists exploring various architectural and algorithmic advancements crucial for building fault-tolerant quantum computers.

In the rapidly evolving landscape of quantum computing, one question looms large: will a true breakthrough in quantum error correction be achieved by late 2025? This isn’t just a technical challenge; it’s the gateway to unlocking the technology’s revolutionary potential, moving beyond today’s noisy intermediate-scale quantum (NISQ) devices into an era of truly fault-tolerant quantum computation.

The Imperative of Quantum Error Correction

Quantum computers promise to solve problems intractable for even the most powerful classical supercomputers, from drug discovery and material science to financial modeling and artificial intelligence. However, these machines are exceptionally delicate. Qubits, the fundamental building blocks of quantum information, are highly susceptible to noise and decoherence from their environment, leading to errors that corrupt computations.

This inherent fragility means that without robust error correction, large-scale, reliable quantum computation remains out of reach. Current quantum processors, often referred to as NISQ devices, operate with limited numbers of qubits and suffer from high error rates, restricting them to specific algorithms and short computational timelines. The dream of harnessing quantum mechanics for transformative applications hinges on our ability to shield these fragile quantum states from environmental interference, a monumental engineering and theoretical endeavor.

Understanding Quantum Noise and Decoherence

Quantum noise arises from uncontrolled interactions between qubits and their surroundings, causing quantum states to lose their coherence – the property that allows them to exist in superpositions and entanglement. Decoherence effectively destroys superposition and entanglement before a computation can finish, erasing the quantum advantage. Various sources contribute to this noise:

- Thermal fluctuations: Even at cryogenic temperatures, residual heat can impart energy to qubits.

- Electromagnetic interference: Stray electromagnetic fields can disrupt delicate quantum states.

- Material imperfections: Defects in the physical substrates and wiring can introduce errors.

- Control errors: Imperfect calibration of laser pulses or microwave signals used to manipulate qubits.

Mitigating these effects is paramount. Techniques such as isolating qubits in vacuum, deep cryogenic cooling, and using superconducting materials are some of the physical approaches. However, these alone are not enough to achieve the incredibly low error rates required for large-scale computations.

The challenge isn’t merely about reducing individual qubit errors but managing the cascading effects of errors across an entire quantum system. Unlike classical computers, where errors can often be corrected by simply duplicating data and voting on the correct value (e.g., three bits, two agreeing), quantum states cannot be perfectly copied due to the no-cloning theorem. This fundamental property necessitates a very different approach to error correction, one that encodes quantum information redundantly across multiple physical qubits.
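The classical repetition strategy mentioned above is easy to simulate. The sketch below is a minimal Python illustration, assuming each copy flips independently with probability p; `majority_vote` and `simulate` are hypothetical names chosen for this example. Three copies fail only when at least two of them flip, so the logical error rate is 3p² − 2p³, which is far below p when p is small.

```python
import random

def majority_vote(bits):
    """Return the value held by most of the copies (classical repetition decoding)."""
    return 1 if sum(bits) > len(bits) // 2 else 0

def simulate(p, trials=100_000, copies=3, seed=0):
    """Estimate how often a majority vote over noisy copies returns the wrong bit."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        value = rng.randint(0, 1)
        # Copy the bit, then flip each copy independently with probability p.
        noisy = [value ^ (rng.random() < p) for _ in range(copies)]
        if majority_vote(noisy) != value:
            failures += 1
    return failures / trials

p = 0.05
print(f"physical: {p}, simulated logical: {simulate(p):.4f}, "
      f"analytic 3p^2 - 2p^3: {3 * p**2 - 2 * p**3:.4f}")
```

Quantum codes keep the redundancy but cannot copy and read out the data this way, which is why they rely on parity (syndrome) measurements instead.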

The Promise of Fault-Tolerant Quantum Computing

Fault-tolerant quantum computing (FTQC) is the ultimate goal, a paradigm where computations can run reliably despite the presence of noise. This is achieved by encoding a single logical qubit across many physical qubits. Errors on individual physical qubits can then be detected and corrected without disturbing the encoded quantum information. The threshold theorem of quantum computation suggests that if the physical error rate is below a certain threshold, it is theoretically possible to perform arbitrarily long quantum computations with high fidelity.

Reaching this fault-tolerant regime is not just a gradual improvement; it represents a conceptual leap. It means moving from performing short, noisy quantum experiments to running complex algorithms with millions of operations. The distinction between NISQ and FTQC devices is critical: NISQ devices are like early, unreliable calculators, while FTQC machines hold the potential to be truly programmable and scalable tools capable of solving real-world problems beyond the reach of classical methods.

The ambitious timeline of late 2025 for achieving error correction viability raises significant discussions within the scientific community. While substantial progress has been made, the jump from current capabilities to a truly fault-tolerant system within such a short timeframe underscores the intense research, innovation, and investment pouring into this field. It’s a race against fundamental physics, demanding both theoretical breakthroughs and ingenious engineering.

Architectural Approaches to Quantum Error Correction

The pursuit of quantum error correction (QEC) is not a one-size-fits-all endeavor; various approaches are being explored, each with its own advantages and challenges. The strategy chosen often depends on the underlying qubit technology, whether it’s superconducting circuits, trapped ions, topological qubits, or others. All methods share the common goal of encoding quantum information redundantly across multiple physical qubits to protect it from noise.

One of the most prominent approaches is the surface code, popular due to its high error threshold and the local nature of its error detection and correction operations. This code arranges qubits on a 2D lattice and encodes information topologically, making it robust against localized errors. The surface code and related topological codes, such as the color code, are particularly appealing for their potential scalability and compatibility with planar fabrication techniques, which are standard in the semiconductor industry.

Topological Quantum Error Correction (TQEC)

Topological codes, such as the surface code, are a class of QEC codes that encode quantum information in a non-local manner, often related to the topology of the system. This means that information is not stored in individual qubits but rather in collective properties of many qubits, making it highly robust against local disturbances. Errors on a few individual qubits within the encoded block do not immediately destroy the logical information; instead, they generate “excitations” that can be moved and measured.

The beauty of topological codes lies in their inherent resilience. Imagine a knot in a rope: you can tug and pull on various parts of the rope, but the knot itself remains unless you fundamentally alter its topological structure. Similarly, the encoded quantum information in a topological code is protected by this non-local encoding. This robustness against local noise is a key reason why they are considered a leading candidate for building fault-tolerant quantum computers.

Key advantages of topological codes include:

- High error threshold: They can tolerate a significant amount of noise before logical errors occur.

- Local operations: Error detection and correction only require interactions between neighboring qubits, simplifying hardware design.

- Scalability: The 2D lattice structure is amenable to manufacturing and expansion.

- Intrinsic protection: Some topological approaches, like those based on Majorana fermions, could theoretically offer inherent error protection at the hardware level.

Despite their theoretical elegance, realizing topological codes in practice is incredibly complex. It requires precise control over large numbers of high-quality qubits and the ability to perform accurate syndrome measurements to detect errors. The engineering challenges associated with maintaining the delicate quantum correlations over vast networks of qubits are substantial.

Stabilizer Codes and Parity Checks

Stabilizer codes are a broader, fundamental class of quantum error-correcting codes, and the surface code is one example. A stabilizer code specifies a set of commuting “stabilizer” operators, and the logical states are the states that every stabilizer leaves unchanged (eigenvalue +1 for all of them). Measuring these stabilizers allows errors to be detected without disturbing the encoded quantum information.

The core idea is similar to classical error correction using parity checks. However, in the quantum realm, we’re not just correcting bit flips (X errors) but also phase flips (Z errors) and combinations of both (Y errors). Stabilizer measurements extract information about the error syndrome – a pattern that indicates which error occurred – without revealing the actual quantum state, thus preserving superposition and entanglement.
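The claim that a syndrome reveals the error without revealing the state follows from a simple rule: a stabilizer measurement returns −1 exactly when the error anticommutes with that stabilizer. The minimal Python sketch below illustrates this using the standard generators of the five-qubit [[5,1,3]] code (cyclic shifts of XZZXI), an example chosen for illustration rather than one discussed in this article. It checks that every single-qubit X, Y, or Z error produces a distinct, nonzero syndrome; the encoded amplitudes never enter the calculation.

```python
from itertools import product

# Stabilizer generators of the five-qubit [[5,1,3]] code: cyclic shifts of XZZXI.
STABILIZERS = ["XZZXI", "IXZZX", "XIXZZ", "ZXIXZ"]

def anticommute(a, b):
    """Single-qubit Paulis anticommute when both are non-identity and different."""
    return a != "I" and b != "I" and a != b

def syndrome(error, stabilizers=STABILIZERS):
    """Syndrome bit is 1 when the error anticommutes with the stabilizer,
    i.e. when an odd number of positions anticommute."""
    return tuple(
        sum(anticommute(e, s) for e, s in zip(error, stab)) % 2
        for stab in stabilizers
    )

# Enumerate every single-qubit error: X (bit flip), Z (phase flip), Y (both).
single_errors = {}
for qubit, pauli in product(range(5), "XYZ"):
    error = "I" * qubit + pauli + "I" * (4 - qubit)
    single_errors[error] = syndrome(error)

print(len(single_errors), "errors,", len(set(single_errors.values())), "distinct syndromes")
print("no error ->", syndrome("IIIII"))
```

Because all fifteen syndromes differ, a decoder can identify any single-qubit error from the four measured bits alone.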

The process generally involves:

- Encoding: Creating an entangled state of multiple physical qubits to represent a single logical qubit.

- Syndrome Measurement: Performing specific measurements on auxiliary “ancilla” qubits that interact with the data qubits. These measurements reveal information about errors without collapsing the data qubit’s state.

- Decoding: Based on the error syndrome, a classical computer determines the most likely error and applies a corrective operation.
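The encode, measure, and decode steps above can be sketched for the simplest case, the three-qubit bit-flip code, restricted to X errors so that the state can be tracked as plain bits. This is a toy under that assumption: a real device measures the two parities through ancilla qubits without learning the encoded bit, and the lookup table here stands in for the classical decoder. Function names are hypothetical.

```python
import random

# Syndrome (parity of qubits 0,1 ; parity of qubits 1,2) -> qubit to flip back.
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def encode(bit):
    """Encoding: logical 0 -> 000, logical 1 -> 111 (tracked as classical bits)."""
    return [bit, bit, bit]

def measure_syndrome(qubits):
    """Parity checks on qubits (0, 1) and (1, 2): they locate a single flip
    but give the same answer whether the encoded bit is 0 or 1."""
    return (qubits[0] ^ qubits[1], qubits[1] ^ qubits[2])

def correct(qubits):
    """Decoding: look up the most likely single flip for this syndrome and undo it."""
    target = LOOKUP[measure_syndrome(qubits)]
    if target is not None:
        qubits[target] ^= 1
    return qubits

def run_cycle(bit, p, rng):
    """One encode -> noise -> syndrome -> correct cycle; True if the logical bit survives."""
    qubits = encode(bit)
    for i in range(3):
        if rng.random() < p:          # independent bit flip on each qubit
            qubits[i] ^= 1
    return correct(qubits) == encode(bit)

q = encode(0)
q[1] ^= 1                             # inject a bit flip on the middle qubit
print(measure_syndrome(q))            # (1, 1)
print(correct(q))                     # [0, 0, 0]

rng = random.Random(1)
trials = 20_000
print("logical success rate:", sum(run_cycle(1, 0.05, rng) for _ in range(trials)) / trials)
```

Two simultaneous flips fool the lookup table into a logical error, which is why larger codes and better physical qubits are both needed.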

The efficiency and effectiveness of these processes are critical. Repeated syndrome measurements are necessary because errors can occur during the measurement process itself. This iterative process of detection and correction is what enables fault tolerance. The development of more efficient and less error-prone methods for syndrome measurement is a major area of research.
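Because a syndrome measurement can itself be wrong, decoders commonly work with detection events: the places where a stabilizer’s outcome changes from one round to the next. The sketch below assumes syndrome rounds are given as lists of 0/1 outcomes (a hypothetical format) and that the code starts in a fresh state where every stabilizer reads 0. A data-qubit error produces a change that persists, while a single misread produces a pair of events in consecutive rounds, which is how repeated rounds let the decoder tell the two apart.

```python
def detection_events(rounds):
    """Compare each syndrome round with the previous one.

    The first round is compared with all zeros, assuming a freshly prepared code
    state. Returns (round_index, stabilizer_index) pairs where the outcome flipped.
    """
    events = []
    previous = [0] * len(rounds[0])
    for t, current in enumerate(rounds):
        for s, (before, now) in enumerate(zip(previous, current)):
            if before != now:
                events.append((t, s))
        previous = current
    return events

# Two stabilizers measured over five rounds.
data_error = [[0, 0], [0, 0], [1, 0], [1, 0], [1, 0]]         # error before round 2 persists
measurement_error = [[0, 0], [0, 1], [0, 0], [0, 0], [0, 0]]  # one misread in round 1

print(detection_events(data_error))         # [(2, 0)]
print(detection_events(measurement_error))  # [(1, 1), (2, 1)]
```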

Achieving a breakthrough by late 2025 would likely involve demonstrating robust, multi-cycle error correction on a large enough scale (perhaps tens of logical qubits encoded across hundreds or thousands of physical qubits) to prove the viability of these architectural approaches for future fault-tolerant systems. This would mean surpassing the “break-even point,” where the overhead of error correction is outweighed by the benefits of noise reduction.

Algorithmic Advancements and Software Layers

While hardware development is crucial for quantum error correction, progress on the algorithmic and software fronts is equally vital. The raw ability to detect and correct errors on physical qubits is only one piece of the puzzle; these operations must be orchestrated efficiently and reliably by clever algorithms and robust software layers. This is where the interface between theoretical QEC codes and practical quantum computation truly takes shape.

The goal is to translate the abstract mathematics of quantum error correction into concrete, executable instructions for quantum processors. This involves developing sophisticated decoders that can interpret the error syndromes detected by hardware and infer the most probable error, as well as optimizing the quantum circuits themselves to minimize the chances of errors occurring in the first place.

Decoding Algorithms for Error Syndromes

Once an error syndrome is measured from the physical qubits, a decoding algorithm takes over. This classical algorithm’s job is to analyze the pattern of syndrome bits and infer the most likely error that produced it. The efficiency and accuracy of decoders are paramount: faster and more precise decoding translates directly into better overall system performance and lower latency.

Decoders must handle a large amount of real-time data, often under strict time constraints. The complexity of these algorithms grows with the size of the quantum computer, requiring significant computational resources. Researchers are exploring various approaches to decoding, including:

- Minimum-weight perfect matching: A common algorithm for surface codes that seeks the minimum number of local errors consistent with the measured syndrome.

- Neural network decoders: Using machine learning to identify error patterns, offering potential for speed and adaptability.

- Custom hardware accelerators: Developing specialized classical hardware (e.g., FPGAs, ASICs) designed specifically for rapid quantum error decoding.

- Belief propagation: Probabilistic methods used in classical coding theory, adapted for quantum error correction.
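To make the minimum-weight idea concrete without a graph library, the sketch below decodes a small bit-flip repetition code by brute force: it enumerates every error pattern, keeps those that reproduce the measured syndrome, and returns one with the fewest flips. This exhaustive search is a stand-in chosen for illustration, not minimum-weight perfect matching itself; it returns the kind of lowest-weight correction matching searches for on this toy code, but its cost grows as 2ⁿ, which is why practical decoders use matching, neural networks, or dedicated hardware instead.

```python
from itertools import product

def repetition_syndrome(errors):
    """Parity of each neighbouring pair of bits in an n-bit repetition code."""
    return tuple(errors[i] ^ errors[i + 1] for i in range(len(errors) - 1))

def min_weight_decode(syndrome, n):
    """Return a lowest-weight error pattern consistent with the syndrome (brute force)."""
    best = None
    for candidate in product((0, 1), repeat=n):
        if repetition_syndrome(candidate) == syndrome:
            if best is None or sum(candidate) < sum(best):
                best = candidate
    return best

n = 7
actual = (0, 1, 1, 0, 0, 0, 0)          # two neighbouring bit flips
s = repetition_syndrome(actual)
print(s)                                 # (1, 0, 1, 0, 0, 0)
print(min_weight_decode(s, n))           # (0, 1, 1, 0, 0, 0, 0)
```

If more than half of the bits flip, the lowest-weight explanation is the complement of the true error, and applying it produces a logical error.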

The speed and accuracy of decoding algorithms are critical, especially as quantum systems scale. A slow decoder might introduce latency that allows new errors to accumulate before the old ones are corrected. Therefore, advancements in this area are as important as breakthroughs in qubit quality.
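The latency problem can be shown with a simple queue model. Assuming hypothetical timings, with one syndrome round arriving every `round_time_us` microseconds and each round taking `decode_time_us` to decode in arrival order, the sketch below computes how far the decoder trails the hardware. The numbers are placeholders, not measurements from any device.

```python
def decoder_lag_us(rounds, round_time_us, decode_time_us):
    """How long after the last syndrome round the decoder finishes (simple queue model).

    Assumptions: one round arrives every round_time_us, rounds are decoded one at a
    time in arrival order, and each takes decode_time_us.
    """
    finish = 0.0
    for k in range(rounds):
        arrival = (k + 1) * round_time_us     # round k is available once measured
        start = max(arrival, finish)          # wait for the data and a free decoder
        finish = start + decode_time_us
    return finish - rounds * round_time_us

for decode_time in (0.8, 1.2):                # hypothetical microseconds per round
    lags = [decoder_lag_us(n, 1.0, decode_time) for n in (10, 1_000, 100_000)]
    print(f"decode {decode_time} us/round:", [f"{lag:.1f} us" for lag in lags])
```

Under this model the lag stays fixed at one decode time when decoding is faster than the syndrome rate, but grows in proportion to the number of rounds when it is slower, so the backlog is unbounded for long computations.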

Optimizing Quantum Circuits for Robustness

Beyond active error correction, researchers are also focusing on designing quantum circuits that are inherently more robust against noise. This field, known as “quantum circuit optimization” or “design for error tolerance,” aims to minimize the occurrence of errors in the first place, thus reducing the burden on the error correction system.

Strategies include:

- Gate decomposition: Breaking down complex quantum operations into sequences of simpler, highly reliable gates.

- Scheduling and routing: Optimizing the order and placement of gates to reduce qubit idle times and minimize crosstalk between neighboring qubits.

- Dynamical decoupling: Applying sequences of pulses to qubits to actively suppress environmental noise without performing full error correction.

- Error-aware compilation: Compiling quantum algorithms in a way that is cognizant of the specific error characteristics of the underlying hardware, perhaps favoring operations that are known to be less noisy on a particular device.
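Dynamical decoupling can be illustrated with its simplest case: a qubit whose frequency is offset by an unknown but constant detuning δ, so its phase drifts as δt. Each π pulse reverses the sign of further phase accumulation, so a sequence whose positive and negative intervals balance cancels the static drift. The sketch assumes ideal, instantaneous pulses and purely static Gaussian detuning noise (the most favourable case; noise that fluctuates during the sequence is only partly suppressed), with pulse times following the CPMG spacing T(2k − 1)/(2N). The noise width and shot count are arbitrary illustrative values.

```python
import cmath
import random

def accumulated_phase(delta, total_time, pulse_times):
    """Phase picked up under a static detuning delta, with ideal, instantaneous
    pi pulses at pulse_times; each pulse flips the sign of further accumulation."""
    phase, sign, last = 0.0, 1, 0.0
    for t in sorted(pulse_times) + [total_time]:
        phase += sign * delta * (t - last)
        sign, last = -sign, t
    return phase

def coherence(pulse_times, total_time=1.0, sigma=5.0, shots=2000, seed=0):
    """Ensemble-averaged coherence |<exp(i*phase)>| over random static detunings."""
    rng = random.Random(seed)
    total = sum(
        cmath.exp(1j * accumulated_phase(rng.gauss(0.0, sigma), total_time, pulse_times))
        for _ in range(shots)
    )
    return abs(total) / shots

def cpmg_times(n_pulses, total_time=1.0):
    """CPMG spacing: pulses at T*(2k-1)/(2N), k = 1..N."""
    return [total_time * (2 * k - 1) / (2 * n_pulses) for k in range(1, n_pulses + 1)]

print("free evolution:", round(coherence([]), 3))
print("spin echo     :", round(coherence(cpmg_times(1)), 3))
print("CPMG, 4 pulses:", round(coherence(cpmg_times(4)), 3))
```

Under these assumptions free evolution averages close to zero coherence, while the echo and CPMG sequences restore it fully.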

These algorithmic advancements often work in tandem with hardware improvements. For instance, better qubit connectivity might simplify routing, while more coherent qubits enable longer dynamical decoupling sequences. The interplay between physical hardware, QEC codes, and software/algorithmic layers forms a complex ecosystem where progress in one area often influences the others.

For quantum error correction to be achievable by late 2025, it suggests that not only will certain hardware benchmarks need to be met, but the software and algorithmic infrastructure to efficiently manage and decode these error processes must also mature rapidly. This holistic progress is essential for transferring scientific breakthroughs into practical quantum computational capabilities.

The Role of Qubit Technology in Error Correction

The type of qubit technology used fundamentally influences the feasibility and approach to quantum error correction. Different physical implementations of qubits have distinct error characteristics, coherence times, and scalability challenges. While the theoretical principles of quantum error correction apply universally, their practical realization is heavily dependent on the underlying hardware platform. This diversity in qubit technologies reflects the ongoing search for the most viable path to fault-tolerant quantum computing.

Leading qubit technologies each present unique advantages and hurdles. Superconducting qubits, for example, offer high gate speeds and are amenable to microfabrication techniques, but require extreme cryogenic temperatures and face challenges with crosstalk and scalability of control lines. Trapped ions boast very long coherence times and high fidelity gates, but their scaling typically involves complex optical setups and shuttling of ions.

Superconducting Qubits and Transmons

Superconducting qubits, particularly transmons, are at the forefront of much of today’s quantum computing research and development. They are fabricated with lithographic techniques adapted from semiconductor manufacturing, allowing many qubits to be integrated on a chip. These circuits are made of superconducting materials (like aluminum) cooled to near absolute zero, where electrons form Cooper pairs and current flows without resistance. In a transmon, a Josephson junction makes the circuit’s energy levels unevenly spaced, so the two lowest levels can be addressed on their own and used as the qubit states.

The advantages of superconducting qubits include:

- Fast gate operations: Speeds on the order of tens of nanoseconds, crucial for performing many operations within coherence times.

- Scalability via microfabrication: Potential for integrating many qubits on a chip, similar to classical microprocessors.

- High fidelity: On leading devices, single- and two-qubit gate error rates have fallen below the roughly 1% threshold of the surface code.

However, they face significant challenges: they are extremely susceptible to environmental noise, requiring exceptional shielding and cryogenic cooling. Crosstalk between neighboring qubits and the complexity of routing control lines for a large number of qubits also pose engineering hurdles. For error correction on these systems, the focus is on maintaining extremely low temperatures and pristine electromagnetic environments, alongside developing more robust gate operations and efficient measurement schemes to extract error syndromes.

Trapped Ions and Optical Qubits

Trapped ions represent another leading qubit technology, known for their exceptional coherence and high gate fidelities. Here, individual atoms are ionized and suspended in a vacuum using electromagnetic fields, forming a “string” or crystal of qubits. Lasers are then used to manipulate their internal energy levels, which serve as the qubit states, and to mediate interactions between them for multi-qubit gates.

The strengths of trapped ion qubits are:

- Long coherence times: Ions are naturally isolated from their environment, leading to very long periods before decoherence sets in.

- High gate fidelity: Some of the highest reported gate fidelities across multiple qubits have been achieved with trapped ions.

- Identical qubits: Every ion of a given species is inherently identical, so there is no fabrication variability between qubits.

The challenge with trapped ions is scalability. As the number of ions increases, the complexity of precisely addressing and manipulating each one with individual laser beams grows significantly. While shuttling ions between different trap zones offers a path to scaling, it adds overhead and introduces its own error sources. For error correction, the long coherence times are a boon, as they allow more time for error syndrome measurements and correction operations before environmental errors accumulate.

The path to achieving QEC by late 2025 likely includes significant strides in one or more of these qubit platforms, potentially through hybrid approaches or discoveries that dramatically overcome their current limitations. Progress hinges on not just the number of qubits, but their quality (coherence time, gate fidelity) and our ability to precisely control them in an increasingly complex system. The engineering ingenuity required across these diverse technologies is immense, as is the scientific effort to push the boundaries of what is physically possible.

Benchmarks and Metrics for Error Correction Success

Defining “achievable” for quantum error correction by late 2025 requires clear benchmarks and metrics. It’s not simply about demonstrating small-scale error correction, but about reaching a point where the benefits of error correction outweigh its costs, paving the way for truly fault-tolerant quantum computation. This often involves specific thresholds and experimental demonstrations that signal a practical path forward.

The quantum computing community uses several key metrics to gauge progress in error correction. These include qubit coherence times, the fidelity of single- and multi-qubit gates, and, perhaps most importantly, the logical error rate compared with the physical error rate. The goal is to show that encoding information across multiple physical qubits brings the logical error rate well below the physical error rate, and that it keeps falling as the code grows, toward the very low error rates classical hardware already delivers.

The Error Threshold and Break-Even Point

The concept of an “error threshold” is fundamental to quantum error correction. It states that for a quantum error-correcting code to be effective, the physical error rate of the qubits and gates must be below a certain value. Above this threshold, enlarging the code does not help: the error-correction operations introduce errors faster than they remove them, so the logical error rate increases as more physical qubits are added.

Different QEC codes have different error thresholds. For example, the surface code is known for having a relatively high error threshold (around 1%) compared to other, more complex codes. This means that if physical gate error rates can be kept below this percentage, it is theoretically possible to achieve arbitrarily low logical error rates by scaling up the number of physical qubits per logical qubit.
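A widely used rule of thumb for the surface code captures this behaviour: the logical error rate per round scales roughly as p_L ≈ A (p / p_th)^((d + 1)/2), where d is the (odd) code distance. The sketch below assumes p_th = 1% and a prefactor A = 0.1; both are illustrative placeholders, not fitted values for any device, and the formula is a trend model rather than an exact probability.

```python
def logical_error_rate(p, d, p_th=0.01, prefactor=0.1):
    """Heuristic surface-code scaling p_L ~ A * (p / p_th) ** ((d + 1) / 2), for odd d.

    p_th and prefactor are illustrative assumptions, not fitted device values,
    and the formula is only a trend model near or below threshold.
    """
    return prefactor * (p / p_th) ** ((d + 1) // 2)

distances = (3, 5, 7, 9)
for p in (0.001, 0.005, 0.012):
    rates = [logical_error_rate(p, d) for d in distances]
    trend = "falls" if rates[-1] < rates[0] else "rises"
    summary = ", ".join(f"d={d}: {r:.1e}" for d, r in zip(distances, rates))
    print(f"p = {p:.3f} -> {summary} ({trend} with distance)")
```

Below threshold, each step of two in distance multiplies the logical error rate by p/p_th; above it, the same step makes things worse.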

The “break-even point” is a related, more practical metric. This is the point where the benefit of error correction (reducing logical errors) surpasses the overhead cost (the additional physical qubits and operations required for error correction). In essence, it’s where a logical qubit actually performs better than its constituent physical qubits. Reaching this milestone is a crucial experimental demonstration for any QEC architecture.

For a breakthrough by late 2025, demonstrating the break-even point for a significant number of logical qubits, showing that logical error rates are indeed lower than the physical error rates, would be a major indicator of success.

Scalability and Resource Overhead

Beyond theoretical thresholds and break-even points, practical error correction success hinges on scalability and resource overhead. Quantum error correction is incredibly resource-intensive; encoding a single logical qubit might require hundreds or even thousands of physical qubits. Furthermore, performing an elementary operation on a logical qubit can involve thousands of physical operations.

Key considerations for scalability:

- Number of physical qubits per logical qubit: How many physical qubits are needed for a practical level of error suppression? Lower numbers are better for scalability.

- Overhead for logical operations: How many physical gate operations are required to perform one logical gate operation? Fewer operations mean faster computations.

- Hardware complexity: Can the chosen QEC scheme be implemented efficiently with existing or foreseeable hardware architectures? This includes the routing of control signals, cooling requirements, and physical footprint.

- Decoding speed: As discussed, the classical decoding process must keep up with the quantum error detection.
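These considerations can be turned into a rough overhead estimate. The sketch below assumes the heuristic scaling p_L ≈ A (p / p_th)^((d + 1)/2) with placeholder values p_th = 1% and A = 0.1, and counts 2d² − 1 physical qubits per logical qubit (d² data qubits plus d² − 1 measurement qubits in a rotated surface-code patch). It ignores routing, logical-gate overhead, and decoder hardware, so real overheads would be larger.

```python
def required_distance(p, target, p_th=0.01, prefactor=0.1, max_d=101):
    """Smallest odd code distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    for d in range(3, max_d + 1, 2):
        if prefactor * (p / p_th) ** ((d + 1) // 2) <= target:
            return d
    raise ValueError("target not reachable within max_d")

def physical_qubits_per_logical(d):
    """Rotated surface-code patch: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1

for p in (0.005, 0.002):
    d = required_distance(p, target=1e-12)
    print(f"p = {p}: distance {d}, about {physical_qubits_per_logical(d)} "
          f"physical qubits per logical qubit")
```

Under these placeholder assumptions, improving the physical error rate from 0.5% to 0.2% cuts the estimated qubit count per logical qubit by more than a factor of five, consistent with the point that better physical qubits reduce the overhead.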

A true breakthrough by late 2025 would likely involve a convincing demonstration that the resource overheads, while significant, are not insurmountable obstacles to building large-scale, fault-tolerant machines. This might involve new encoding schemes that are more resource-efficient or significant advances in physical qubit quality that reduce the number of physical qubits required for a given logical error rate. The economic viability of scaling these systems will also be a major consideration for commercialization.

Ultimately, the metrics for success are about proving that quantum error correction is not just theoretically possible but experimentally achievable and practically scalable. Late 2025 as a timeline suggests an anticipation of concrete demonstrations that move the field decidedly closer to the fault-tolerant era, going beyond isolated proof-of-concept experiments to platforms that truly offer a pathway to scalable quantum computation with low logical error rates.

Challenges and Roadblocks to Achieving QEC by 2025

While the prospect of achieving quantum error correction by late 2025 is exciting, the journey is fraught with formidable challenges. The complexity of these systems, the delicate nature of quantum phenomena, and the sheer engineering scale present significant hurdles. Overcoming these involves not just incremental improvements but often fundamental breakthroughs in materials science, control engineering, and theoretical understanding. The optimism for a 2025 breakthrough rests on the rapid acceleration of innovation across multiple disciplines.

The current state of quantum computing is characterized by “noisy” devices. Each operation, each interaction, and even the mere passage of time introduces errors. While some error rates have fallen dramatically in recent years, they are still far from the levels required for large-scale, fault-tolerant computation. These challenges are multifaceted, touching upon hardware, software, and fundamental physics.

Hardware Fabrication and Coherence

One of the most persistent challenges lies in the hardware itself. Manufacturing quantum chips with sufficient quality and scale is incredibly difficult. For superconducting qubits, this means fabricating devices with extremely low defect rates, perfectly uniform components, and highly reliable connections, all while maintaining the ultra-low temperatures necessary for superconductivity.

Key hardware challenges include:

- Qubit uniformity: Ensuring that each qubit and its connections behave identically, which is crucial for predictable operations and scaling.

- Reducing intrinsic noise: Further minimizing imperfections in materials and fabrication that contribute to decoherence and error during operations.

- Scaling control lines: As the number of qubits increases, so does the complexity of the wiring and control electronics required to address and manipulate each qubit individually. This can lead to increased heating and crosstalk.

- Cryogenics at scale: Maintaining extreme temperatures (millikelvin range) for thousands or millions of qubits requires sophisticated and expensive cooling infrastructure.

For trapped ions, scalability means developing complex ion trap arrays that can shuttle ions efficiently without introducing errors, and precisely directing thousands of individual laser beams. Even a single stray photon or an atom’s vibration can disrupt delicate quantum states. Improving qubit coherence times—how long a qubit can reliably hold quantum information—is an ongoing race against environmental decoherence. Longer coherence times allow more time for computation and error correction before information is lost, reducing the overall overhead.

Control Precision and Crosstalk

Manipulating qubits with high precision is another major hurdle. Quantum gates, the operations that transform qubit states, must be executed with extremely high fidelity. Any imprecision, whether in the timing, amplitude, or frequency of control pulses, can lead to errors. Achieving single-qubit gate fidelities above 99.9% and two-qubit gate fidelities above 99% is considered a baseline for many error correction schemes, and while labs are reaching these levels, maintaining them consistently across many qubits remains difficult.
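A quick calculation shows why maintaining these fidelities across many gates matters. Assuming errors are independent, the chance that a circuit runs without any error is roughly the product of its gate fidelities, so small per-gate errors compound quickly. The gate counts below are hypothetical, and the model ignores idling, crosstalk, and measurement errors.

```python
def circuit_fidelity(n_single, n_two, f_single=0.999, f_two=0.99):
    """Rough estimate: product of gate fidelities, assuming independent errors
    and ignoring idling, crosstalk, and measurement errors."""
    return f_single ** n_single * f_two ** n_two

for n_single, n_two in ((100, 50), (1_000, 500), (10_000, 5_000)):
    print(f"{n_single} single-qubit + {n_two} two-qubit gates: "
          f"~{circuit_fidelity(n_single, n_two):.3g} chance of no error")
```

Even at the baseline fidelities quoted above, circuits with hundreds of two-qubit gates are unlikely to finish without an error, which is why long computations need error correction rather than better gates alone.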

Crosstalk, where performing an operation on one qubit unintentionally affects a neighboring qubit, is a pervasive problem. As qubits are packed more densely on a chip and operated simultaneously, preventing unwanted interactions becomes crucial. Mitigating crosstalk requires clever circuit design, precise pulse shaping, and often dynamic calibration techniques, which add complexity to the control system.

Challenges in control and crosstalk management:

- Pulse shaping: Designing optimized microwave pulses or laser pulses to execute gates rapidly and accurately while minimizing off-target effects.

- Calibration complexity: As systems grow, recalibrating tens, hundreds, or thousands of qubits after environmental fluctuations becomes a monumental task.

- Simultaneous operation: Precisely coordinating operations across many qubits in parallel without introducing interference.

Achieving quantum error correction by late 2025 will necessitate significant advancements in these areas, likely through the integration of AI-driven calibration, highly integrated control electronics (perhaps on-chip), and novel materials that reduce intrinsic noise sources. The interplay between these challenges means that progress in one area often depends on breakthroughs in another, making the overall timeline ambitious but not impossible, given the current pace of innovation.

The Road Ahead: What to Expect Post-2025

Even if a significant breakthrough in quantum error correction is achieved by late 2025, it wouldn’t signify the immediate arrival of a fully fault-tolerant, universal quantum computer ready for widespread commercial use. Instead, it would mark the beginning of a new era, transitioning from experimental demonstrations to the systematic engineering and scaling of these complex machines. The period post-2025 would likely be dedicated to refining these technologies, increasing their reliability, and making them more accessible.

The journey from demonstrating a functional quantum error correction scheme to building practical fault-tolerant quantum computers is akin to the early days of classical computing. Proving a transistor works in a lab is one thing; building a complex microprocessor with billions of transistors on a single chip is an entirely different level of engineering challenge. Similarly, the scaling and industrialization of fault-tolerant quantum systems will be a long and iterative process.

Scaling and Industrialization

One of the primary focuses post-2025 would be on dramatically increasing the number of physical qubits while maintaining or improving their quality and control. This involves moving beyond proof-of-concept laboratory setups to more robust, manufacturing-friendly processes. Industrialization implies the ability to produce quantum hardware consistently, at scale, and with high yields.

This includes:

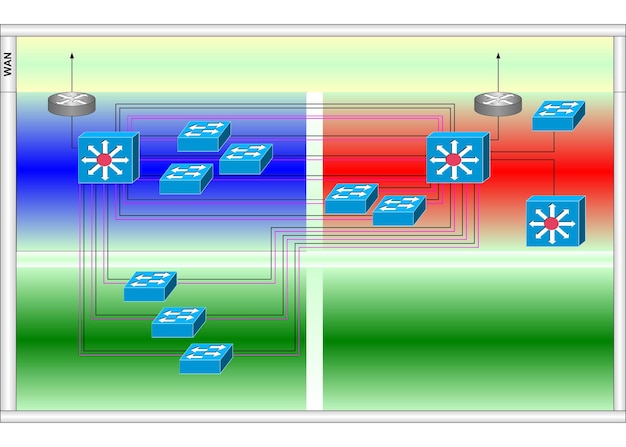

- Modular architectures: Developing ways to connect smaller quantum processors to form larger systems, akin to networked classical supercomputers.

- Advanced manufacturing: Implementing automated fabrication processes to produce high-quality quantum chips more efficiently and cost-effectively.

- Integrated control systems: Moving control electronics closer to the qubits, ideally on the same chip or within the same cryostat, to minimize noise and complexity.

- Standardization: Developing common interfaces and protocols for quantum hardware and software to foster a more interoperable ecosystem.

The development of a robust supply chain for quantum computing components and materials would also become crucial. This includes specialized cryogenic equipment, ultra-pure materials, and high-precision control electronics, all of which are currently niche markets but would need to expand significantly to support large-scale quantum computer production.

Development of Applications and Ecosystem

With the promise of fault-tolerant machines on the horizon, the emphasis would shift further towards identifying and developing real-world applications that truly leverage quantum advantage. While algorithms like Shor’s and Grover’s have been known for decades, their practical implementation requires fault-tolerant systems.

Post-2025, we can expect:

- Algorithm refinement: Optimizing existing quantum algorithms and discovering new ones tailored for fault-tolerant architectures.

- Software stack maturity: Developing more user-friendly programming languages, compilers, and simulation tools that allow researchers and developers to program fault-tolerant quantum computers without needing deep expertise in low-level quantum physics.

- Hybrid quantum-classical computing: Further integration of quantum processors as accelerators for specific tasks within larger classical computation workflows.

- Workforce development: Training a new generation of quantum engineers, programmers, and scientists equipped to design, build, and operate these sophisticated machines.

The period beyond 2025 would be an exciting time of practical exploration, moving beyond the question of “if” quantum error correction is achievable to “how” it can be industrialized and applied to solve the world’s most challenging computational problems. The breakthrough in late 2025, if it happens, would be a testament to human ingenuity and a powerful catalyst for the next era of quantum technology.

| Key Aspect | Brief Description |
|---|---|
| 🔬 Error Correction Goal | Protect fragile quantum information from noise, vital for practical quantum computers. |
| 🛡️ Key Approaches | Topological codes (e.g., surface code) and stabilizer codes are leading strategies. |
| 📈 2025 Outlook | Achieving the “break-even point” where error correction demonstrably reduces logical errors. |
| ⚙️ Main Hurdles | Hardware quality, qubit coherence, scaling, and precise control are major challenges. |

Frequently Asked Questions About Quantum Error Correction

What is quantum error correction, and why is it essential?

Quantum error correction (QEC) is a critical technique designed to protect quantum computations from errors caused by noise and decoherence. Unlike classical information, quantum information is fragile and easily corrupted. QEC is essential because without it, building large-scale, reliable quantum computers capable of solving complex problems remains impossible.

How does quantum error correction differ from classical error correction?

Classical error correction often relies on duplicating information, but the no-cloning theorem forbids copying an unknown quantum state. Quantum error correction instead encodes information redundantly across multiple entangled qubits, allowing errors to be detected and fixed without destroying the delicate quantum state itself. It corrects not just bit-flip errors but also phase-flip errors.

What does achieving “break-even” mean in quantum error correction?

Achieving “break-even” means demonstrating that the benefits of quantum error correction outweigh its costs. Specifically, it means a logical qubit protected by a quantum error-correcting code has a lower error rate than the physical qubits it is built from, proving that the code effectively protects quantum information from noise.

Which qubit technologies are leading candidates for quantum error correction?

Both superconducting qubits (like transmons) and trapped ions are currently leading candidates for implementing quantum error correction. Superconducting qubits offer fast gate speeds and scalability via microfabrication, while trapped ions boast long coherence times and high gate fidelities. Each has unique challenges in scaling and noise mitigation.

What would come after achieving error correction by late 2025?

Achieving error correction by late 2025 would be a major milestone, but not the end. The next phase would involve scaling up the number of logical qubits, industrializing manufacturing processes, further optimizing quantum algorithms, and developing a robust software and hardware ecosystem. This would pave the way for building fully fault-tolerant, universal quantum computers.

Conclusion

The question of whether a significant breakthrough in quantum error correction is finally achievable by late 2025 isn’t just speculative; it underscores the intense global effort and rapid advancements transforming the field of quantum computing. While formidable challenges remain, particularly in scaling hardware, enhancing qubit coherence, and perfecting control mechanisms, the scientific community is making unprecedented strides. If the forecasted breakthroughs materialize, they would herald a new computational era, fundamentally shifting quantum computing from a theoretical marvel and noisy experimental devices to a tangible, fault-tolerant technology. This pivotal achievement would not be an endpoint, but rather a robust beginning, setting the stage for the industrialization of quantum computers and the exploration of their transformative potential across science, technology, and industry.